Back to the Data Model

A. The requirement is to design flexible database schema patterns

This text records a email conversation between Barry and someone with some interesting questions working for an Investment Bank

1. Email received February 22nd. 2011 :-

Hi,

I am currently working as a SQL ETL developer for an Investment Bank.

The bank has recently been taken over and thus the financial product reporting has been divided into

various reporting streams which has meant various feeds have had to be created to supply the various new stake holders of the bank.

Whilst we are able to produce these feeds I feel the current database architecture (set up by my predecessor) is perhaps not the best solution.

This is simply because I have had to constantly tweak the tables to get the desired output.

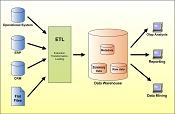

The database architecture is such that our sql servers are primarily used for ETL.

The source data comes from Hyperion enterprise Management system which countries use to load their figures.

The source data is uploaded into DDM (our SQL ETL environment) transformed and loaded into Essbase cubes.

Due to there being copies of data in both Essbase and Hyperion only the latest MI data is stored in SQL Server.

I am of the feeling that if we had a central repository of the data in sql, i.e. a data warehouse.

It would give us the flexibility to provide feeds via datamarts for all our customers as well as handling the metadata,

such as product hierarchies and location hierarchies which are used as dimensions in our OLAP cubes.

I have had a look at your datamodel for how to load a banking datawarehouse I just need a little advice on how to model this.

http://www.databaseanswers.org/data_models/data_warehouse_loading/index.html

The central source data we receive is in the following format:

Field Name

Format

Description

DATACATEGORY

char(10)

Scenario with Year and Client type;

< Material cut out here.>

We receive about 11 of these files each corresponding to a different region for reporting.

In addition to these files we also receive once a month meta data from our offshore team.

We thus have a window of maintenance were implement the new meta data changes.

These include the new product hierarchy structure, location structure and FX rates.

The location and product structure are then used to build the product and location dimensions

in our Essbase models.

These dimensions are both parent child dimensions.

I would imagine we would have fact table that shows the YTD amounts for each product in each category.

Our dimensions would be product, account and location.

For the product and dimension tables and Location would the data be stored?

Should it be in hierarchical form? Is the Metadata stored in a separate datawarehouse?

Any advice or information you could provide would be very much appreciated.

Many thanks

Your friend

2. Barry's respponse :-

Hi

Your ideas are on the right lines.

I have drafted a Data Architecture for you :-

http://www.databaseanswers.org/data_models/data_warehouse_loading_alt/index.html

The idea of 'de-coupling' Hyperion at the Back-end from Essbase at the Front-End gives

you very valuable flexibility.

HTH

Barry

3. Email received February 23rd. 2011 :-

Hi Barry,

Many thanks for your quick reply. I certainly do not have a budget for 500/day but I can definitely cover your pocket money : - )

For me the main thing is to just streamline the processes that we have running now whilst maintaining

the flexibility we currently have now particularly with regard to 'on request' refreshes etc

For example the location structure and product structure we use to create our dimensions in essbase are actually present in

Hyperion and can be extracted.

Yet for historical reasons updates to the dimensions are . manually.

If we can extract the structures directly from the source then they could be loaded into a datawarehouse, sliced and diced and

then used to auotmatcally update dimensions without the need for manual intervention.

I am also very interested inthe staging area that you proposed. How many tables are needed, what lookups are used etc.

Barry Williams

February 22nd. 2011

Principal Consultant

Database Answers